position parameter. Corrections to sort, reshape, reserve, trim_capacity, move assign, sort, erase/erase_if, splice, reshape, copy constructor, copy assign. Move constructor definition added. *this' clause is removed. Heading corrections. Formatting corrections. *this") to match current standard ie. "T shall be Cpp17MoveInsertable into hive" becomes "T is Cpp17MoveInsertable into *this". Added "This operation may change capacity()" to remarks in reshape. sort: Throws section removed as this is covered by blanket wording and by Remarks and the post-Remarks note. Specifically the following line is removed: "Throws: bad_alloc if it fails to allocate any memory necessary for this operation. comp may also throw." Note: forward_list::sort and list::sort do not include such a line.

The purpose of a container in the standard library cannot be to provide the optimal solution for all scenarios. Inevitably in fields such as high-performance trading or gaming, the optimal solution within critical loops will be a custom-made one that fits that scenario perfectly. However, outside of the most critical of hot paths, there is a wide range of application for more generalized solutions.

Hive is a formalisation, extension and optimization of what is typically known as a 'bucket array' or 'object pool' container in game programming circles. Thanks to all the people who've come forward in support of the paper over the years, I know that similar structures exist in various incarnations across many fields including high-performance computing, high-performance trading, 3D simulation, physics simulation, robotics, server/client application and particle simulation fields (see this Google Groups discussion, the hive supporting paper #1 and appendix links to prior art).

The concept of a bucket array is: you have multiple memory blocks of elements, and a boolean token for each element which denotes whether or not that element is 'active' or 'erased' - commonly known as a skipfield. If it is 'erased', it is skipped over during iteration. When all elements in a block are erased, the block is removed, so that iteration does not lose performance by having to skip empty blocks. If an insertion occurs when all the blocks are full, a new memory block is allocated.
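As a minimal sketch of the concept just described (not taken from any particular engine), the following shows a bucket array of int with a boolean skipfield. The names Block, BucketArray and block_capacity are hypothetical, the block size is fixed, and erased slots are not re-used, which matches the typical design rather than hive.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical block: element storage plus one boolean per slot.
struct Block {
    std::vector<int>  slots;    // element storage for this block
    std::vector<bool> erased;   // boolean skipfield: true means "skip this slot"
    std::size_t       live = 0; // number of non-erased elements in the block
};

struct BucketArray {
    static constexpr std::size_t block_capacity = 4; // fixed block size
    std::vector<Block> blocks;

    // Append to the back block; allocate a new block when the back one is full.
    void insert(int value) {
        if (blocks.empty() || blocks.back().slots.size() == block_capacity) {
            blocks.emplace_back();
            blocks.back().slots.reserve(block_capacity);
        }
        Block& b = blocks.back();
        b.slots.push_back(value);
        b.erased.push_back(false);
        ++b.live;
    }

    // Mark a slot as erased; no other element is moved.
    void erase(std::size_t block, std::size_t slot) {
        Block& b = blocks[block];
        if (b.erased[slot]) return;
        b.erased[slot] = true;
        // A block with no live elements is removed so iteration never visits it.
        if (--b.live == 0)
            blocks.erase(blocks.begin() + static_cast<std::ptrdiff_t>(block));
    }

    // Visit every non-erased element; note the branch on every slot.
    template <class F>
    void for_each(F f) const {
        for (const Block& b : blocks)
            for (std::size_t i = 0; i < b.slots.size(); ++i)
                if (!b.erased[i]) f(b.slots[i]);
    }
};
```

The per-slot branch in for_each is the cost that the rest of this section is concerned with.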

The advantages of this structure are as follows: because a skipfield is used, no reallocation of elements is necessary upon erasure. Because the structure uses multiple memory blocks, insertions to a full container also do not trigger reallocations. This means that element memory locations stay stable and iterators stay valid regardless of erasure/insertion. This is highly desirable, for example, in game programming because there are usually multiple elements in different containers which need to reference each other during gameplay, and elements are being inserted or erased in real time. The only non-associative standard library container which also has this feature is std::list, but it is undesirable for performance and memory-usage reasons. This does not stop it being used in many open-source projects due to this feature and its splice operations.

Problematic aspects of a typical bucket array are that they tend to have a fixed memory block size, tend to not re-use memory locations from erased elements, and utilize a boolean skipfield. The fixed block size (as opposed to block sizes with a growth factor) and lack of erased-element re-use leads to far more allocations/deallocations than is necessary, and creates memory waste when memory blocks have many erased elements but are not entirely empty. Given that allocation is a costly operation in most operating systems, this becomes important in performance-critical environments. The boolean skipfield makes iteration time complexity at worst O(n) in capacity(), as there is no way of knowing ahead of time how many erased elements occur between any two non-erased elements. This can create variable latency during iteration. It also requires branching code for each skipfield node, which may cause performance issues on processors with deep pipelines and poor branch-prediction failure performance.

A hive uses a non-boolean method for skipping erased elements, which allows for more-predictable iteration performance than a bucket array and O(1) iteration time complexity; the latter of which means it meets the C++ standard requirements for iterators, which a boolean method doesn't. It has an (optional - on by default) growth factor for memory blocks and reuses erased element locations upon insertion, which leads to fewer allocations/reallocations. Because it reuses erased element memory space, the exact location of insertion is undefined. Insertion is therefore considered unordered, but the container is sortable. Lastly, because there is no way of predicting in advance where erasures ('skips') may occur between non-erased elements, an O(1) time complexity [] operator is not possible and thereby the container is bidirectional but not random-access.

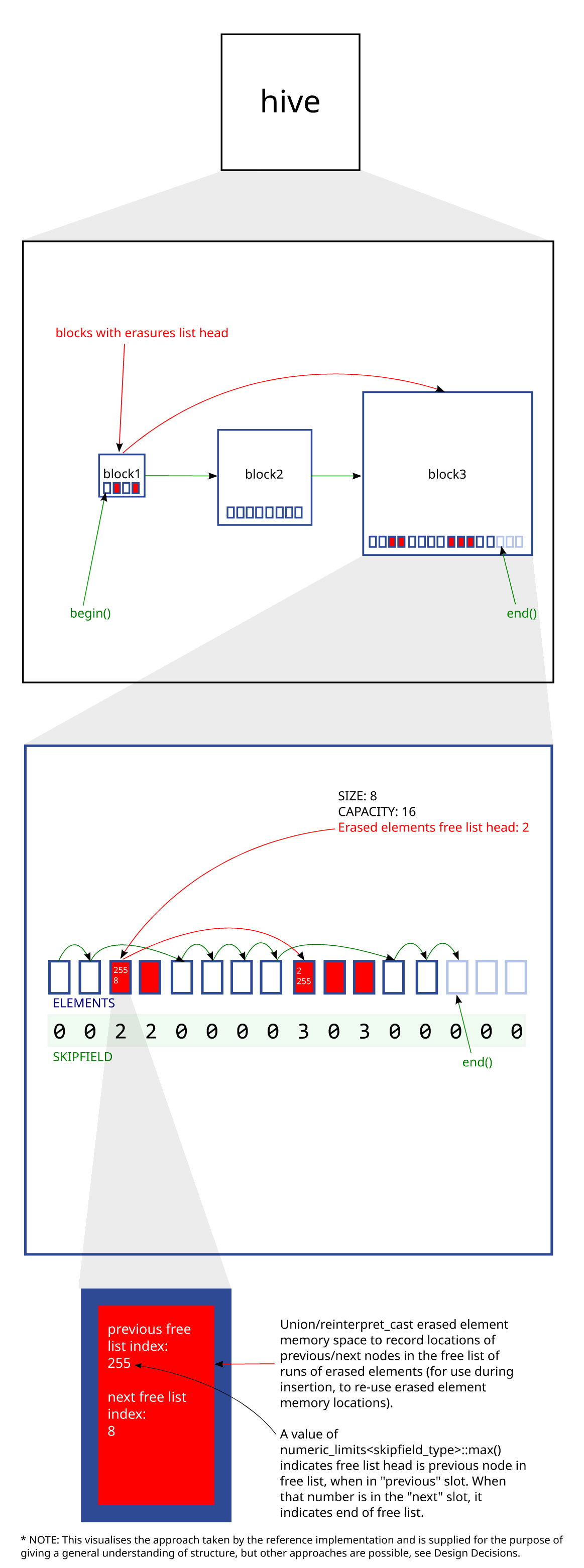

There are two patterns for accessing stored elements in a hive: the first is to iterate over the container and process each element (or skip some elements using advance/prev/next/iterator ++/-- functions). The second is to store an iterator returned by insert() (or a pointer derived from the iterator) in some other structure and access the inserted element in that way. To better understand how insertion and erasure work in a hive, see the following diagrams.

The following demonstrates how insertion works in a hive compared to a vector when size == capacity.

The following images demonstrate how non-back erasure works in a hive compared to a vector.

There is additional introductory information about the container's structure in this CPPcon talk, though much of its information is out of date (hive no longer uses a stack but a free list instead, benchmark data is out of date, etcetera), and more detailed implementation information is available in this CPPnow talk. Both talks discuss the precursor for std::hive, called plf::colony.

For the purposes of the non-technical-specification sections of this document, the following terms are defined:

There are situations where data is heavily interlinked, iterated over frequently, and changing often. An example is the typical video game engine. Most games will have a central generic 'entity' or 'actor' class, regardless of their overall schema (an entity class does not imply an ECS). Entity/actor objects tend to be 'has a'-style objects rather than 'is a'-style objects, which link to, rather than contain, shared resources like sprites, sounds and so on. Those shared resources are usually located in separate containers/arrays so that they can be re-used by multiple entities. Entities are in turn referenced by other structures within a game engine, such as quadtrees/octrees, level structures, and so on.

Entities may be erased at any time (for example, a wall gets destroyed and no longer is required to be processed by the game's engine, so is erased) and new entities inserted (for example, a new enemy is spawned). While this is all happening the links between entities, resources and superstructures such as levels and quadtrees, must stay valid in order for the game to run. The order of the entities and resources themselves within the containers is, in the context of a game, typically unimportant, so an unordered container is okay. More specific requirements for game engines are listed in the appendices.

But the non-fixed-size container with the best iteration performance in the standard library, vector, loses pointer validity to elements within it upon insertion, and pointer/index validity upon erasure. This leads towards sophisticated and often restrictive workarounds when developers attempt to utilize vector or similar containers under the above circumstances.

std::list and the like are not suitable due to their poor memory locality, which leads to poor cache performance during iteration. This does not stop them from being used extensively. This is however an ideal situation for a container such as hive, which has a high degree of memory locality. Even though that locality can be punctuated by gaps from erased elements, it still works out better in terms of iteration performance than all other standard library containers other than deque/vector, regardless of the ratio of erased to non-erased elements (see benchmarks). It is also in most cases faster for insertion and (non-back) erasure than current standard library containers.

As another example, particle simulation (weather, physics etcetera) often involves large clusters of particles which interact with external objects and each other. The particles each have individual properties (eg. spin, speed, direction etc) and are being created and destroyed continuously. Therefore the order of the particles is unimportant; what is important is the speed of erasure and insertion. No current standard library container has both strong insertion and non-back erasure performance, so again this is a good match for hive.

Reports from other fields suggest that, because most developers aren't aware of containers such as this, they often end up using solutions which are sub-par for iterative performance such as std::map and std::list in order to preserve pointer validity, when most of their processing work is actually iteration-based. So, introducing this container would both create a convenient solution to these situations and increase awareness of this approach. It will ease communication across fields, as opposed to the current scenario where each field uses a similar container but each has a different name for it (object pool, bucket array, etcetera).

This is purely a library addition, requiring no changes to the language.

The three core aspects of a hive from an abstract perspective are:

Each element block houses multiple elements. The metadata about each block may or may not be allocated with the blocks themselves and could be contained in a separate structure. This metadata must include at a minimum, the number of non-erased elements within each block and the block's capacity - which allows the container to know when the block is empty and needs to be removed from the sequence, and also allows iterators to judge when the end of a block has been reached, given the starting point of the block.

It should be noted that most of the data associated with the skipping mechanism and erased-element recording mechanisms should be per-element-block and independent of subsequent/previous element blocks, as otherwise you would create unacceptably variable latency for any fields involving timing sensitivity. Specifically with a global data set for either, erase would likely require all data subsequent to a given element block's data to be reallocated, when an element block is removed from the iterative sequence. Insert would likewise require reallocation of all data to a larger memory space when hive capacity expanded.

In the original reference implementation (current reference implementation is here) the specific structure and mechanisms have changed many times over the course of development, however the interface to the container and its time complexity guarantees have remained largely unchanged. So it is likely that regardless of specific implementation, it will be possible to maintain this interface without obviating future improvement.

The current reference implementation implements the 3 core aspects as follows. Information about known alternative ways to implement these is available in the appendices.

In the reference implementation this is essentially a doubly-linked list of 'group' structs containing (a) a dynamically-allocated element block, (b) element block metadata and (c) a dynamically-allocated skipfield. The element blocks and skipfields have a growth factor of 2. The metadata includes information necessary for an iterator to iterate over hive elements, such as that already mentioned and information useful to specific functions, such as the group's sequence order number (used for iterator comparison operations). This linked-list approach keeps the operation of removing empty element blocks from the sequence at O(1) time complexity.

The reference implementation uses a skipfield pattern called the low complexity jump-counting pattern. This encodes the length of runs of contiguous erased elements (skipblocks) into a skipfield which allows for O(1) time complexity during iteration (see the paper above for details). Since there is no branching involved in iterating over the skipfield aside from end-of-block checks, it is less problematic computationally than a boolean skipfield (which has to branch for every skipfield read) in terms of CPUs which don't handle branching or branch-prediction failure efficiently (eg. Core2).
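To make the encoding concrete, here is a small sketch of a single-block skipfield in the spirit of the low complexity jump-counting pattern. It is an illustration under assumptions, not the reference implementation: the Skipfield type, its member names, the extra trailing node and the use of indexes instead of pointers are all choices made for this example, and re-insertion into erased slots is not handled.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-block skipfield. It holds capacity + 1 nodes; the extra
// trailing node stays 0 so that reading one past the last slot is safe.
// Assumes capacity <= 255 so that run lengths fit in one byte.
struct Skipfield {
    std::vector<std::uint8_t> node;

    explicit Skipfield(std::size_t capacity) : node(capacity + 1, 0) {}

    // Record the erasure of slot i (which must not already be erased).
    // Only the first and last node of each skipblock are kept accurate;
    // interior nodes merely need to be non-zero.
    void mark_erased(std::size_t i) {
        const std::uint8_t left  = (i == 0) ? std::uint8_t{0} : node[i - 1];
        const std::uint8_t right = node[i + 1];
        const std::uint8_t run   = static_cast<std::uint8_t>(left + right + 1);
        node[i]         = run; // exact if i starts or ends the run, non-zero otherwise
        node[i - left]  = run; // start node of the (possibly merged) skipblock
        node[i + right] = run; // end node of the (possibly merged) skipblock
    }

    // First non-erased slot at or after i, where i is either a non-erased slot
    // or the start of a skipblock. Returns capacity if none remains.
    std::size_t skip_from(std::size_t i) const { return i + node[i]; }

    // Advance from a non-erased slot to the next one: one add, one read, one add.
    std::size_t next(std::size_t i) const { return skip_from(i + 1); }
};
```

Because next() never inspects the interior of a skipblock, the cost of stepping over a run of erased elements does not depend on the length of the run.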

The reference implementation utilizes the memory space of erased elements to form a per-element-block index-based doubly-linked free list of skipblocks, which is used during subsequent insertion. Each element block has a 'free list head' as a metadata member. The free lists are index-based rather than pointer-based in order to reduce the amount of space necessary to store the 'previous' and 'next' list links in an erased element's memory. The beginning and end of the free lists are marked using numeric_limits<skipfield_type>::max() in the 'previous' and 'next' indexes, respectively. If the free list head is equal to this number this means there are no erasures in that element block. Since this number is reserved that means element block capacities cannot be larger than numeric_limits<skipfield_type>::max() ie. 255 elements instead of 256 for 8-bit skipfield types, as otherwise the free list would be unable to address a skipblock comprised only of the last element in the block.

These per-element-block free lists are combined with a doubly-linked pointer-based intrusive list of blocks with erased elements in them, the head of which is stored as a member variable in hive. The combination of these two things allows re-use of erased element memory space in O(1) time.
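The per-block half of this mechanism can be sketched as follows. This is a simplified illustration rather than the reference implementation: it links individual erased slots instead of whole skipblocks, stores int elements only, and the names Links, Slot, Block, no_link and used are hypothetical. The 'previous' link is maintained for fidelity with the description above, although this reduced example only ever removes the head of the list.

```cpp
#include <cstddef>
#include <cstdint>
#include <limits>

using skipfield_type = std::uint8_t;

// Reserved index marking "no previous/next" and "free list is empty".
constexpr skipfield_type no_link = std::numeric_limits<skipfield_type>::max();

struct Links { skipfield_type prev, next; };

// An erased slot's memory is re-used to hold the free list links.
union Slot {
    int   value;
    Links links;
};

struct Block {
    static constexpr std::size_t capacity = 8; // must be <= no_link (255)
    Slot slots[capacity];
    skipfield_type free_head = no_link; // head of this block's free list
    skipfield_type used = 0;            // slots handed out from the unused tail

    // Push the erased slot onto the front of the free list.
    void erase(skipfield_type i) {
        slots[i].links = Links{no_link, free_head};
        if (free_head != no_link) slots[free_head].links.prev = i;
        free_head = i;
    }

    // Re-use the most recently erased slot if there is one, otherwise take
    // the next never-used slot. Returns the slot index, or no_link if full.
    skipfield_type insert(int v) {
        skipfield_type i;
        if (free_head != no_link) {
            i = free_head;
            free_head = slots[i].links.next;
            if (free_head != no_link) slots[free_head].links.prev = no_link;
        } else if (used < capacity) {
            i = used++;
        } else {
            return no_link;
        }
        slots[i].value = v;
        return i;
    }
};
```

Both operations touch a fixed number of slots, which is what keeps re-use of erased memory at O(1).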

More information on these approaches, and alternative approaches to the 3 core aspects, is available to read in the alt implementation appendix.

Iterators are bidirectional in hive but also provide constant time complexity >, <, >=, <= and <=> operators for convenience (eg. in for loops when skipping over multiple elements per loop and there is a possibility of going past a pre-determined end element). This is achieved by keeping a record of the relative order of element blocks. In the reference implementation this is done by assigning a number to each memory block in its metadata. In an implementation using a vector of pointers to groups instead of a linked list, one can simply use the position of the pointers within the vector to determine this. Comparing the relative order of two iterators' blocks, then comparing the memory locations of the elements which the iterators point to (if they happen to be within the same memory block), is enough to implement all comparisons.
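A minimal sketch of that two-stage comparison follows. The Group and Iter structs and the precedes function are hypothetical stand-ins for an implementation's block metadata and iterator; only the ordering logic is the point.

```cpp
#include <cstddef>
#include <functional>

// Hypothetical block metadata: a sequence number plus the element storage.
struct Group {
    std::size_t order_number; // position of this block in the hive's sequence
    int*        elements;
};

// Hypothetical iterator state: current block and current element.
struct Iter {
    const Group* group;
    const int*   element;
};

// True if a comes before b in iteration order. Block order is compared first;
// element addresses are only compared when both iterators share a block.
inline bool precedes(const Iter& a, const Iter& b) {
    if (a.group != b.group)
        return a.group->order_number < b.group->order_number;
    return std::less<const int*>{}(a.element, b.element);
}
```

The remaining relational operators follow from precedes in the usual way, for example a > b is precedes(b, a).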

Iterator implementations are dependent on the approach taken to core aspects 1 and 2 as described above. The reference implementation's iterator stores a pointer to the current 'group' struct, plus a pointer to the current element and a pointer to its corresponding skipfield node. It is possible to replace the element and skipfield pointers with a single index value, but benchmarks have shown this to be slower, despite the reduced memory cost.

The reference implementation's ++ operation is as shown below, following the low-complexity jump-counting pattern's algorithm:

-- operation is the same except both step 1 and 2 involve subtraction rather than addition and step 3 checks to see if the element pointer is now before the beginning of the element block instead of beyond the end of it. If it is before the beginning of the block it traverses to the back element of the previous group's element block, and subtracts the value of the back skipfield node from the element pointer and skipfield pointer.

We can see from the above that every so often iteration will involve a transition to the next/previous element block in the hive's sequence of active blocks, depending on whether we are doing ++ or --. Hence for every element block transition, 2 reads of the skipfield are necessary instead of 1.
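The following sketch is one way to express a ++ operation that is consistent with the description above, including the second skipfield read on a block transition. It is an assumption-laden illustration: it uses indexes where the reference implementation uses pointers, a vector of blocks instead of a linked list, and the names Block, Cursor and increment are hypothetical. Every slot is assumed to be either live or erased, and every block is assumed to hold at least one live element.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct Block {
    std::vector<int>          elements;  // one entry per slot
    std::vector<std::uint8_t> skipfield; // elements.size() + 1 nodes, last node is 0
};

// Hypothetical iterator state: block index plus slot index.
struct Cursor {
    std::size_t block;
    std::size_t slot;
};

// Move to the next non-erased element. The end position is {blocks.size(), 0}.
inline Cursor increment(const std::vector<Block>& blocks, Cursor c) {
    const Block& b = blocks[c.block];
    c.slot += 1;                         // step 1: move to the adjacent slot
    c.slot += b.skipfield[c.slot];       // step 2: jump over a skipblock, if any
    if (c.slot == b.elements.size()) {   // step 3: ran off the end of this block
        c.block += 1;
        c.slot = 0;
        if (c.block < blocks.size())
            c.slot = blocks[c.block].skipfield[0]; // second read: leading skipblock
    }
    return c;
}
```

A loop over the whole sequence starts at {0, blocks[0].skipfield[0]} and stops when the cursor's block index equals blocks.size().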

advance, prev and next

For these functions, complexity is dependent on the state of the hive instance, position of the iterator and the amount of distance to travel, but in many cases will be less than linear, and may be constant. To explain: it is necessary in a hive to store, for each element block, both capacity metadata (for the purpose of iteration) and metadata about how many non-erased elements are present (ie. size, for the purpose of removing blocks from the iterative chain once they become empty). For this reason, intermediary blocks between the iterator's initial block and its final destination block (if these are not the same block, and are not immediately adjacent) can be skipped rather than iterated linearly across, by using the size metadata.

This means that the only linear time operations are any iterations within the initial block and the final block. However if either the initial or final block have no erased elements (as determined by comparing whether the block's capacity and size metadata are equal), linear iteration can be skipped for that block and pointer/index math used instead to determine distances, reducing complexity to constant time. Finally, if the iterator points to the first element in that element block, and distance is greater-or-equal-to the block's size, we can treat it as an intermediary block and just skip it, subtracting size from the distance we want to travel. Hence the best case for this operation is constant time, the worst is linear in the distance.

distance(first, last)

The same considerations which apply to advance, prev and next also apply to distance - intermediary element blocks between first and last's blocks can be skipped in constant time and their size metadata added to the cumulative distance count, while first's block and last's block (if they are not the same block) must be linearly iterated across unless either block has no erased elements, in which case the operation becomes pointer/index math and is reduced to constant time for that block. If first and last are in the same block but are in the first and last element slots in the block, distance can again be calculated from the block's size metadata in constant time. If they are not in the same block but first points to the first element in its block, the first block can be skipped and its size added to the distance travelled. Likewise if last points to the last element in its block, the last block can also be skipped and its size added.
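A sketch of this block-skipping strategy is given below. To keep it short it uses a boolean erased flag per slot rather than a jump-counting skipfield, and positions are (block index, slot index) pairs. Block, Pos, count_live and hive_distance are hypothetical names, and last is treated as a half-open bound, with {blocks.size(), 0} standing for end().

```cpp
#include <cstddef>
#include <vector>

struct Block {
    std::vector<bool> erased; // one flag per slot; erased.size() is the capacity
    std::size_t       size;   // metadata: number of non-erased elements
};

struct Pos {
    std::size_t block;
    std::size_t slot;
};

// Number of non-erased slots in [from, to) within one block.
inline std::size_t count_live(const Block& b, std::size_t from, std::size_t to) {
    if (b.size == b.erased.size()) return to - from;         // no erasures: index math
    if (from == 0 && to == b.erased.size()) return b.size;   // whole block: metadata
    std::size_t n = 0;
    for (std::size_t i = from; i < to; ++i) n += b.erased[i] ? 0 : 1; // linear scan
    return n;
}

// Number of non-erased elements in [first, last); first must not come after last.
inline std::size_t hive_distance(const std::vector<Block>& blocks, Pos first, Pos last) {
    if (first.block == last.block)
        return count_live(blocks[first.block], first.slot, last.slot);
    const Block& fb = blocks[first.block];
    std::size_t n = count_live(fb, first.slot, fb.erased.size());
    // Intermediary blocks contribute their size metadata in constant time each.
    for (std::size_t b = first.block + 1; b < last.block; ++b) n += blocks[b].size;
    if (last.block < blocks.size())
        n += count_live(blocks[last.block], 0, last.slot);
    return n;
}
```

Only the partial first and last blocks can require a linear scan, and only when they contain erasures.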

iterator insert/emplace

Insertion can re-use previously-erased element memory locations when available, so position of insertion is effectively random unless no previous erasures have occurred, in which case all elements will likely be inserted linearly to the back of the container in the majority of implementations. If inserting to the back this invalidates iterators pointing to end(). It could also potentially insert before begin(), if erasures have occurred at the beginning of the container.

While it is not mandated to do so, hive implementations will generally insert into existing element blocks when able, and create a new element block only when all existing element blocks are full.

If hive is implemented as a vector of pointers to element blocks instead of a linked list of element blocks, creation of a new element block would occasionally involve expanding the pointer vector, itself O(n) in the number of blocks, but this is within amortized limits since it is only occasional.

void insert

For range, fill and initializer_list insertion, it is not possible to guarantee that all the elements inserted will be sequential in the hive's sequence, and so it is not considered useful to return an iterator to the first inserted element. There is a precedent for this in the various std::map containers. Therefore these functions return void.

The same considerations regarding iterator invalidation in singular insertion above also apply to these insertion styles.

For multiple insertions an implementation can call reserve() in advance, reducing the number of allocations necessary (whereas repeated singular insertions would generally follow the implementation's block growth factor, and possibly allocate more and smaller element blocks than necessary). This has no effect on time complexity which is still linear in the number of elements inserted.

iterator erase(const_iterator position)

Erasure is a simple matter of destructing the element in question and updating whatever data is associated with the erased-element skipping mechanism. No reallocation of subsequent elements is necessary and hence the process is O(1). Updates to the erased-element recording and skipping mechanisms are also required to be O(1).

When an element block becomes empty of non-erased elements it must be freed to the OS (or reserved for future insertions, depending on implementation) and removed from the hive's sequence of active blocks. If it were not, we would end up with non-O(1) iteration, since there would be no way to predict how many empty element blocks were between the current element block being iterated over, and the next element block with non-erased elements in it.

In a linked-list-of-blocks style of implementation this removal is always O(1). However if the hive were implemented as a vector of pointers to element blocks, this could, depending on implementation, trigger an O(n) relocation of subsequent block pointers in the vector (a smart implementation would only do this occasionally, using erase_if - see the alt implementation appendix). Hence this operation is O(1) amortized.

Under what circumstances element blocks are reserved rather than deallocated is implementation-defined - however given that small memory blocks have low cache locality compared to larger ones, from a performance perspective it is best to only reserve the largest blocks currently allocated in the hive. In my benchmarking, reserving both the back and 2nd-to-back element blocks while ignoring the actual capacity of the blocks themselves seemed to have the most beneficial performance characteristics out of other techniques attempted.

There are three main performance advantages to retaining back blocks as opposed to just any block - the first is that these will be, under most circumstances, the largest blocks in the hive (given the growth factor). An exception to this is when splice is used, which may result in a smaller block following a larger block (implementation-dependent). The second advantage is that in situations where erasures and insertions are occurring at the back of the hive (this assumes no erased element locations in other memory blocks, which would most likely be used for the insertions) continuously and in quick succession, retaining the back block avoids large numbers of deallocations/reallocations. The third advantage is that deallocations of these larger blocks can, in part, be moved by the user to non-critical code regions via trim_capacity(). Though ultimately if the user wants total control of when allocations and deallocations occur they would need to use a custom allocator.

Lastly, the reason for returning an iterator is: if an erasure empties an element block of elements, the block will be deallocated or reserved - in either case, it's no longer part of the iterative sequence and an iterator pointing into it, such as position, can no longer be used for iteration. This is important for erasing inside a loop.
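The loop pattern this enables is the familiar one shown below. Since hive is not assumed to be available to the reader, std::list is used as a stand-in purely because its erase takes a const_iterator and returns an iterator in the same way; erase_even is a hypothetical helper written for this example.

```cpp
#include <list>

// Erase every even value while iterating over the container.
// Works with any container whose erase(const_iterator) returns the iterator
// following the erased element.
template <class Container>
void erase_even(Container& c) {
    for (auto it = c.begin(); it != c.end();) {
        if (*it % 2 == 0)
            it = c.erase(it); // continue from the returned iterator, not the old one
        else
            ++it;
    }
}
```

Using the returned iterator means the loop never touches an iterator into a block that the erasure has just removed from the iterative sequence.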

iterator erase(const_iterator first, const_iterator last)

The same considerations for singular erasure above also apply for range-erasure. In addition, ranged erasure is O(n) if elements are non-trivially-destructible. If they are trivially-destructible, we can follow similar logic to the distance specialization above. That is to say, for the first and last element blocks in the range, if the number of elements in either block is equal to its capacity, there are no erasures in the block and we may be able to - depending on the erased-element-skipping-mechanism - simply notate a new skipblock without needing to deal with any existing skipblocks. If there are erasures in that element block, we would (implementation-dependent) likely need to identify whether the range we're erasing contains erased elements in between the non-erased elements, in order to update metadata (such as number of non-erased elements in the block) correctly.

For intermediary blocks between the first and last blocks, for trivially-destructible types we can simply deallocate or reserve these without calling the destructors of elements or dealing with the erased-element skipping/recording mechanisms for those blocks. As with distance, if the first iterator points to the first element in its element block, the first block can be treated like an intermediary block - likewise for the last block, if the last iterator points to the last element in its element block. Hence for trivially-destructible types, the entire operation can be linear in the number of blocks contained within the range or linear in the number of elements contained within the range, or somewhere in between.

As with singular erasure, in a vector-of-pointers-to-blocks style of implementation, there may be a need to reallocate element block pointers backward when blocks become empty of elements.

Lastly, specifying a return iterator for range-erase may seem pointless, as no reallocation of elements occurs in erase for hive, so the return iterator will almost always be the last const_iterator of the first, last pair. However if last was end(), the new value of end() will be returned. In this case either the user intentionally submitted end() as last, or they incremented an iterator pointing to the final element in the hive and submitted that as last. The latter is the only valid reason to return an iterator from the function, as it may occur as part of a loop which is erasing elements which ends when end() is reached. If end() is changed by the erasure, but the iterator used in the loop does not accurately reflect end()'s new value, that iterator could iterate past end() and the loop would never end.

void reshape(std::hive_limits block_limits)

This function updates the block capacity limits in the hive with user-defined ones and, if necessary, changes any active blocks which fall outside of those limits to be within the limits (and deallocates any reserved blocks outside of the limits - although an implementation could choose to allocate new reserved blocks if they wanted). A program will not compile if the function is used with non-copyable/movable types. It will invalidate pointers/iterators/references to elements if reallocation of elements to other element blocks occurs.

The order of elements post-reshape is not guaranteed to be stable, in order to allow for optimizations. Specifically: in the instance where a given element block does not fit within the limits supplied, the elements within that element block could be reallocated to previously-erased element locations in other element blocks which do fit within the limits supplied. Or they could be reallocated to the back of the final element block, if it fits within the limits, or into reserved blocks if they fit within the limits.

If the existing current limits fit within the new user-supplied ones, no checking of block capacities is needed and the operation is O(1). If they do not but existing blocks may fit within the limits, all blocks need to be checked, making the operation O(n) in the number of blocks (both active and reserved). If any blocks containing elements don't fit within the supplied limits reallocation will occur and the operation is at worst O(n) in capacity().
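The three complexity cases can be summarised as a small decision function. This is only a sketch of the reasoning above, not part of the proposed interface: block_limits_t is a hypothetical stand-in for std::hive_limits, and reshape_work and classify_reshape are names invented for this example.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for std::hive_limits.
struct block_limits_t {
    std::size_t min;
    std::size_t max;
};

enum class reshape_work {
    none,         // O(1): the old limits nest inside the new ones
    check_blocks, // O(number of blocks): all blocks inspected, all fit
    reallocate    // up to O(capacity()): some block must have its elements moved
};

inline reshape_work classify_reshape(block_limits_t current, block_limits_t requested,
                                     const std::vector<std::size_t>& block_capacities) {
    // If the current limits fit within the requested ones, every block that
    // satisfied the old limits also satisfies the new ones.
    if (requested.min <= current.min && current.max <= requested.max)
        return reshape_work::none;
    // Otherwise each block's capacity has to be compared against the new limits.
    for (std::size_t cap : block_capacities)
        if (cap < requested.min || cap > requested.max)
            return reshape_work::reallocate;
    return reshape_work::check_blocks;
}
```

For example, with current limits of 8 to 64 and blocks of capacity 8, 16 and 32, requesting 4 to 128 needs no checking, requesting 8 to 32 needs a scan of the blocks, and requesting 16 to 64 forces the 8-element block's contents to be moved.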

static constexpr std::hive_limits block_capacity_hard_limits() noexcept

As opposed to block_capacity_limits() which returns the current min/max element block capacities for a given instance of hive, this allows the user to get any implementation's min/max 'hard' lower/upper limits for element block capacities ie. the limits which any user-supplied limits must fit within. For example, if an implementation's hard limit is 3 elements min, 1 million elements max, all user-supplied limits must be >= 3 and <= 1 million.

This is useful for 2 reasons:

static constexpr std::hive_limits block_capacity_default_limits() noexcept

Likewise, this returns the default limits for a given hive and type/allocator.

This is useful for 2 reasons:

void clear()

User expectation was that clear() would erase all elements but not deallocate element blocks. Therefore all active blocks are emptied of elements and become reserved blocks. If deallocation of memory blocks is desired, a clear() call can be followed by a trim_capacity() call. For trivially-destructible types element destruction can be skipped and depending on implementation the process may be O(1).

iterator get_iterator(const_pointer p) noexcept

const_iterator get_iterator(const_pointer p) const noexcept

Because hive iterators could be large, potentially storing three pieces of data - eg. pointers to: current element block, current element and current skipfield node - a program storing many links to elements within a hive may opt to dereference iterators to get pointers and store those instead of iterators, to save memory and improve performance via reduced cache use. This function reverses that process, giving an iterator which can then be used for operations such as erase.

A get_const_iterator function was fielded as a workaround for the possibility of someone wanting to supply a non-const pointer and get a const_iterator back, however as_const fulfills this same role when supplied to get_iterator and doesn't require expanding the interface of hive. Likewise it was decided to use const_pointers because if a user wants to supply a non-const pointer they can use as_const, whereas there is no meaningful equivalent process to convert const_pointer to pointer.

Note that this function is only guaranteed to return an iterator that corresponds to the pointer supplied - it makes no checks to see whether the element which p originally pointed to is the same element which p now points to (eg. from an ABA scenario). Resolving this problem is down to the end user and could involve having a unique id within elements or similar (more info in the frequently-asked questions appendix).

Technically, a precondition of the function is that p points to an element in *this, and does not point to an erased element, otherwise behaviour is undefined. This is due to the "lifetime pointer zap" issue ie. reading the value of a pointer to an erased element is undefined behaviour in C++. In practice this is usually non-problematic and many fields are fine with this situation. The reference implementation returns end() when p is not an element in *this and it is possible that other implementations may do the same. LEWG decided to remove this as an Effect of the function due to the UB mentioned.

Note 1: in order to check whether a given element is erased when an implementation is using the low-complexity jump-counting pattern, the additional operations specified under "Parallel processing" in that paper must be followed.

Note 2: get_iterator compares pointers against the start and end

memory locations of the active blocks in

*this. There was some confusion that this would be problematic

due to obscure rules in the standard which state that a given platform may

allow addresses inside of a given memory block to essentially not be

contiguous, at least in terms of the std::less/std::greater/>/</etc

operators. According to Jens Maurer, these difficulties can be bypassed via

hidden channels between the library implementation and the compiler.
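For illustration only, a user-level approximation of the block range check described in Note 2 might look like the following. The function name points_into_block is hypothetical. It uses std::less and std::greater_equal rather than the built-in operators, since the function objects are specified to give a total order over pointers; a real library implementation may instead rely on the compiler channels mentioned above.

```cpp
#include <functional>

// Returns true if p lies within the half-open range [block_begin, block_end).
// The function objects are used because the built-in relational operators only
// give specified results for pointers into the same array.
template <class T>
bool points_into_block(const T *p, const T *block_begin, const T *block_end)
{
  return std::greater_equal<const T *>()(p, block_begin)
      && std::less<const T *>()(p, block_end);
}
```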

void shrink_to_fit()

A decision had to be made as to whether this function should, in the

context of hive, be allowed to reallocate elements (as std::vector

implementations tend to do) or simply trim off reserved blocks (as

std::deque implementations tend to do). Due to the fact that a large hive

memory block could have as few as one remaining element in a large active

block after a series of erasures, it makes little sense to only trim reserved

blocks, so instead a shrink_to_fit reallocates all elements to

as few active blocks as possible in order to increase cache locality during

iteration and reduce memory usage. It cannot guarantee that size() ==

capacity() after the operation, because the min/max block capacity

limits of *this may prevent that.

One potential implementation is fairly brute-force - create a new temporary hive, reserve(size() of original hive), copy/move all elements from the original hive into the temporary, then operator = && the temporary into the original. A more astute implementation might allocate a temporary array detailing the full capacity and unused capacity of each block, then use some procedure to move elements out of some blocks and into as few of the existing blocks as possible, filling up any erased element locations and/or unused space at the back of the hive and only allocating new element blocks as-necessary. The latter approach is also why the order of elements post-reshape is not guaranteed to be stable.
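The brute-force approach described above can be sketched generically as follows. This is a minimal sketch, not the reference implementation: brute_force_shrink is a hypothetical name, and std::vector is assumed as a stand-in container purely because it is available everywhere (a hive would use insert() rather than push_back()).

```cpp
#include <utility>
#include <vector>

// Brute-force compaction: build a right-sized temporary, move every element
// across, then move-assign the temporary over the original.
// Container is assumed to provide size(), reserve(), push_back() and move assignment.
template <class Container>
void brute_force_shrink(Container &c)
{
  Container temp;
  temp.reserve(c.size());
  for (auto &element : c)
  {
    temp.push_back(std::move(element));
  }
  c = std::move(temp);
}
```

The cost of this approach is that every element is moved, even those already sitting in well-filled blocks, which is what the second approach described above tries to avoid.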

void trim_capacity()

void trim_capacity(size_type n)

The trim_capacity() command was also introduced as a way to free reserved blocks which had been previously created via reserve() or

transformed from active blocks to reserved blocks via erase(), without

reallocating elements and invalidating iterators as shrink_to_fit() does.

The second overload was introduced as a way of allowing the user to say "I

want to retain at least n capacity while freeing reserved blocks, so that I

have room for future insertions without having to allocate again". This

means the user doesn't have to know how much unused capacity is in (a)

unused element memory space in the back block, (b) unused

element memory space from prior erasures, or (c) reserved blocks. They

just say how much they want to retain, and the implementation will free as

much of the remainder (capacity() - n) as possible if

there are suitable reserved blocks available to deallocate.
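The arithmetic behind the second overload can be modelled with a toy structure. In this sketch capacity_model and trim_reserved are hypothetical names, and the simple greedy pass over the reserved blocks is an assumption; an implementation is free to choose which reserved blocks to deallocate.

```cpp
#include <cstddef>
#include <vector>

// Toy model of a hive's capacity bookkeeping.
struct capacity_model
{
  std::size_t capacity;                      // capacity of active + reserved blocks
  std::vector<std::size_t> reserved_blocks;  // capacity of each reserved block
};

// Frees reserved blocks for as long as the remaining capacity stays >= n.
// Active blocks are never touched, so no elements move.
// Returns the number of blocks freed.
inline std::size_t trim_reserved(capacity_model &m, std::size_t n)
{
  std::size_t freed = 0;
  for (auto it = m.reserved_blocks.begin(); it != m.reserved_blocks.end();)
  {
    if (m.capacity - *it >= n) // freeing this block still leaves at least n capacity
    {
      m.capacity -= *it;
      it = m.reserved_blocks.erase(it);
      ++freed;
    }
    else
    {
      ++it;
    }
  }
  return freed;
}
```

With a total capacity of 100 and reserved blocks of 10, 20 and 30, asking to retain 60 frees the first two blocks and keeps the third, leaving a capacity of 70.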

void sort()

Although the container has unordered insertion, there may be circumstances where sorting is desired. Because hive uses bidirectional iterators, using std::sort or other random access sort techniques is not possible. Therefore an internal sort routine is supplied, bringing it in line with std::list. An implementation of the sort routine used in the reference implementation of hive can be found in a non-container-specific form here - see that page for the technique's advantages over the usual sort algorithms for non-random-access containers. An allowance is made for sort to allocate memory if necessary, so that algorithms such as this can be used. Erased element memory space and reserved blocks can also be used as temporary sorting memory instead of, or as well as, allocating. Since memory allocation is unspecified but allowed for std::list::sort/std::forward_list::sort, stating this allowance is not strictly necessary, just a courtesy.
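To show why an allowance for allocation is useful, here is a deliberately simplified sketch of sorting a non-random-access container through temporary random-access storage. It is not the technique used by the reference implementation; sort_via_temporary is a hypothetical name, and std::list is assumed as the stand-in bidirectional container.

```cpp
#include <algorithm>
#include <functional>
#include <iterator>
#include <list>
#include <vector>

// Moves the elements into a temporary vector, sorts them there with std::sort,
// then moves them back into the container's existing element locations in order.
// The temporary vector is the memory allocation that sort is permitted to make.
template <class Container, class Compare = std::less<typename Container::value_type>>
void sort_via_temporary(Container &c, Compare comp = Compare())
{
  using value_type = typename Container::value_type;
  std::vector<value_type> temp(std::make_move_iterator(c.begin()),
                               std::make_move_iterator(c.end()));
  std::sort(temp.begin(), temp.end(), comp);
  std::move(temp.begin(), temp.end(), c.begin());
}
```

Because elements are moved between existing locations rather than relinked, pointers to locations stay usable but no longer refer to the same values, which is consistent with sort being allowed to invalidate references, pointers and iterators.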

void unique();

template <class BinaryPredicate>

size_type unique(BinaryPredicate binary_pred);

Likewise, if a container can be sorted, unique() may be of use post-sort. Optimal implementation of unique involves calling the range-erase function where possible, as range-erase has potentially constant time depending on the state of the given blocks, as opposed to calling single-element erase() repeatedly which would be at worst O(n - 1).
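The range-erase strategy can be sketched as follows, with std::list assumed as a stand-in since it has the same erase(first, last) interface and iterator stability on erasure. The name unique_by_range_erase is hypothetical. Each run of consecutive duplicates is removed with a single range-erase call, and the predicate is applied once per element after the first.

```cpp
#include <cstddef>
#include <functional>
#include <iterator>
#include <list>

template <class Container, class BinaryPredicate = std::equal_to<typename Container::value_type>>
std::size_t unique_by_range_erase(Container &c, BinaryPredicate pred = BinaryPredicate())
{
  std::size_t erased = 0;
  auto current = c.begin();
  while (current != c.end())
  {
    // Find the run of elements which are each equivalent to their predecessor.
    auto run_begin = std::next(current);
    auto run_end = run_begin;
    auto previous = current;
    while (run_end != c.end() && pred(*run_end, *previous))
    {
      previous = run_end;
      ++run_end;
      ++erased;
    }
    // One range-erase per run, instead of one single-element erase per duplicate.
    current = (run_begin != run_end) ? c.erase(run_begin, run_end) : run_begin;
  }
  return erased;
}
```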

void splice(hive &x)

void splice(hive &&x)

*this + the number of blocks in x)

Whether x's active blocks are transferred to the beginning

or end of *this's sequence of active blocks, or interlaced in

some way (for example to order blocks by their capacity from small to

large) is implementation-defined. Better performance may be gained in some

cases by allowing the source's active blocks to go to the front rather than

the back, depending on how full the final active block in x

is. This is because unused elements that are not at the back of hive's

iterative sequence will need to be marked as skipped in some way, and

skipping over large numbers of elements will incur a small performance

disadvantage during iteration compared to skipping over a small number of

elements, due to memory locality.

This function may throw in three ways - the first is a

length_error exception if any of the capacities of

x's active blocks are outside of *this's block capacity

limits. The second is an exception if the allocators of the

two hives are different. Third is a potential bad_alloc in the case of a

vector-of-pointers-to-blocks style of implementation, where an

allocation may be made if *this's pointer vector isn't of

sufficient capacity to accommodate the pointers to x's active blocks.

For that scenario the time complexity (to expand the vector and reallocate all pointers) is linear in the number of the element blocks in *this + the number of active blocks in x. But regardless of implementation, a check needs to be

made as to whether x's active blocks are within *this's current block limits. This check may be O(1) or O(n) in the number of x's active blocks depending on the values of *this's and x's current limits (same logic as reshape() above).

Final note: reserved blocks in x are not transferred into *this. This was decided by LEWG to be non-implementation defined in

order to not create unexpected behaviour when moving from one implementation to another. An implementation may need to count the amount of capacity stored in reserved blocks in the two hive instances in order to correct the total capacity values of both, which may involve a traversal of reserved blocks.
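The block limit check described above, including its O(1) and O(n) cases, might be sketched like this. The names limits and check_splice_limits are hypothetical, and the vector of block capacities is an assumed stand-in for however an implementation stores its active blocks.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Mirrors the min/max members of hive_limits.
struct limits
{
  std::size_t min;
  std::size_t max;
};

// Throws std::length_error if any of the source's active block capacities lie
// outside the destination's current limits.
inline void check_splice_limits(const limits &dest, const limits &source,
                                const std::vector<std::size_t> &source_block_capacities)
{
  // O(1) case: the source's limits sit inside the destination's, so every
  // source block is necessarily within bounds.
  if (source.min >= dest.min && source.max <= dest.max)
  {
    return;
  }
  // O(n) case: each active block has to be checked individually.
  for (std::size_t capacity : source_block_capacities)
  {
    if (capacity < dest.min || capacity > dest.max)
    {
      throw std::length_error("source block capacity outside destination block limits");
    }
  }
}
```

Note that the O(n) case can still succeed: a source with wider limits may happen to contain only blocks which fit within the destination's limits.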

Suggested location of hive in the standard is Sequence Containers.

<algorithm>

<any>

<array>

<atomic>

<barrier>

<bit>

<bitset>

<charconv>

<chrono>

<codecvt>

<compare>

<complex>

<concepts>

<condition_variable>

<coroutine>

<deque>

<exception>

<execution>

<expected>

<filesystem>

<flat_map>

<flat_set>

<format>

<forward_list>

<fstream>

<functional>

<future>

<generator>

<hazard_pointer>

<hive>

<initializer_list>

<inplace_vector>

<iomanip>

<ios>

<iosfwd>

<iostream>

<istream>

<iterator>

<latch>

<limits>

<list>

<locale>

<map>

<mdspan>

<memory>

<memory_resource>

<mutex>

<new>

<numbers>

<numeric>

<optional>

<ostream>

<print>

<queue>

<random>

<ranges>

<ratio>

<regex>

<scoped_allocator>

<semaphore>

<set>

<shared_mutex>

<source_location>

<span>

<spanstream>

<sstream>

<stack>

<stacktrace>

<stdexcept>

<stdfloat>

<stop_token>

<streambuf>

<string>

<string_view>

<strstream>

<syncstream>

<system_error>

<thread>

<tuple>

<type_traits>

<typeindex>

<typeinfo>

<unordered_map>

<unordered_set>

<utility>

<valarray>

<variant>

<vector>

<version>

#define __cpp_lib_hive ?????? // also in <hive>

| Subclause | Header |

| Requirements | |

| Sequence containers | <array>, <deque>, <forward_list>, <hive>, <inplace_vector>, <list>, <vector> |

| Associative containers | <map>, <set> |

| Unordered associative containers | <unordered_map>, <unordered_set> |

| Container adaptors | <flat_map>, <flat_set>, <queue>, <stack> |

| Views | <span>, <mdspan> |

<hive> synopsis [hive.syn]

// [hive] class template hive

#include <initializer_list> // see [initializer.list.syn]

#include <compare> // see [compare.syn]

namespace std {

struct hive_limits

{

size_t min;

size_t max;

constexpr hive_limits(size_t minimum, size_t maximum) noexcept : min(minimum), max(maximum) {}

};

// class template hive

template <class T, class Allocator = allocator<T>> class hive;

template<class T, class Allocator>

void swap(hive<T, Allocator>& x, hive<T, Allocator>& y)

noexcept(noexcept(x.swap(y)));

template<class T, class Allocator, class U = T>

typename hive<T, Allocator>::size_type

erase(hive<T, Allocator>& c, const U& value);

template<class T, class Allocator, class Predicate>

typename hive<T, Allocator>::size_type

erase_if(hive<T, Allocator>& c, Predicate pred);

namespace pmr {

template <class T>

using hive = std::hive<T, polymorphic_allocator<T>>;

}

}

hive [hive]

reserve.

(5.1) — The minimum limit shall be no larger than the maximum limit.

(5.2) — When limits are not specified by a user during construction, the implementation's default limits are used.

(5.3) — The default limits of an

implementation are not guaranteed to be the same as the minimum and maximum

possible capacities for an implementation's element blocks [Note 1: To

allow latitude for both implementation-specific and user-directed

optimization. - end note]. The latter are defined as hard limits. The maximum hard limit shall be no

larger than std::allocator_traits<Allocator>::max_size().

(5.4) — If user-specified limits are not within hard limits, or if the specified minimum limit is greater than the specified maximum limit, behavior is undefined.

(5.5) — An element block is said to be within the bounds of a pair of minimum/maximum limits when its capacity is greater-or-equal-to the minimum limit and less-than-or-equal-to the maximum limit.

== and !=. A hive also meets

the requirements of a reversible container ([container.rev.reqmts]), of an

allocator-aware container ([container.alloc.reqmts]), and some of the

requirements of a sequence container, including several of the optional

sequence container requirements ([sequence.reqmts]). Descriptions are

provided here only for operations on hive that are not described in that

table or for operations where there is additional semantic information.

three_way_comparable<strong_ordering>.

namespace std {

template<class T, class Allocator = allocator<T>>

class hive {

public:

// types

using value_type = T;

using allocator_type = Allocator;

using pointer = typename allocator_traits<Allocator>::pointer;

using const_pointer = typename allocator_traits<Allocator>::const_pointer;

using reference = value_type&;

using const_reference = const value_type&;

using size_type = implementation-defined; // see [container.requirements]

using difference_type = implementation-defined; // see [container.requirements]

using iterator = implementation-defined; // see [container.requirements]

using const_iterator = implementation-defined; // see [container.requirements]

using reverse_iterator = std::reverse_iterator<iterator>; // see [container.requirements]

using const_reverse_iterator = std::reverse_iterator<const_iterator>; // see [container.requirements]

// [hive.cons] construct/copy/destroy

constexpr hive() noexcept(noexcept(Allocator())) : hive(Allocator()) { }

constexpr explicit hive(const Allocator&) noexcept;

constexpr explicit hive(hive_limits block_limits) : hive(block_limits, Allocator()) { }

constexpr hive(hive_limits block_limits, const Allocator&);

explicit hive(size_type n, const Allocator& = Allocator());

hive(size_type n, hive_limits block_limits, const Allocator& = Allocator());

hive(size_type n, const T& value, const Allocator& = Allocator());

hive(size_type n, const T& value, hive_limits block_limits, const Allocator& = Allocator());

template<class InputIterator>

hive(InputIterator first, InputIterator last, const Allocator& = Allocator());

template<class InputIterator>

hive(InputIterator first, InputIterator last, hive_limits block_limits, const Allocator& = Allocator());

template<container-compatible-range<T> R>

hive(from_range_t, R&& rg, const Allocator& = Allocator());

template<container-compatible-range<T> R>

hive(from_range_t, R&& rg, hive_limits block_limits, const Allocator& = Allocator());

hive(const hive& x);

hive(hive&&) noexcept;

hive(const hive& x, const type_identity_t<Allocator>& alloc);

hive(hive&&, const type_identity_t<Allocator>& alloc);

hive(initializer_list<T> il, const Allocator& = Allocator());

hive(initializer_list<T> il, hive_limits block_limits, const Allocator& = Allocator());

~hive();

hive& operator=(const hive& x);

hive& operator=(hive&& x) noexcept(allocator_traits<Allocator>::propagate_on_container_move_assignment::value || allocator_traits<Allocator>::is_always_equal::value);

hive& operator=(initializer_list<T>);

template<class InputIterator>

void assign(InputIterator first, InputIterator last);

template<container-compatible-range <T> R>

void assign_range(R&& rg);

void assign(size_type n, const T& t);

void assign(initializer_list<T>);

allocator_type get_allocator() const noexcept;

// iterators

iterator begin() noexcept;

const_iterator begin() const noexcept;

iterator end() noexcept;

const_iterator end() const noexcept;

reverse_iterator rbegin() noexcept;

const_reverse_iterator rbegin() const noexcept;

reverse_iterator rend() noexcept;

const_reverse_iterator rend() const noexcept;

const_iterator cbegin() const noexcept;

const_iterator cend() const noexcept;

const_reverse_iterator crbegin() const noexcept;

const_reverse_iterator crend() const noexcept;

// [hive.capacity] capacity

bool empty() const noexcept;

size_type size() const noexcept;

size_type max_size() const noexcept;

size_type capacity() const noexcept;

void reserve(size_type n);

void shrink_to_fit();

void trim_capacity() noexcept;

void trim_capacity(size_type n) noexcept;

constexpr hive_limits block_capacity_limits() const noexcept;

static constexpr hive_limits block_capacity_default_limits() noexcept;

static constexpr hive_limits block_capacity_hard_limits() noexcept;

void reshape(hive_limits block_limits);

// [hive.modifiers] modifiers

template<class... Args> iterator emplace(Args&&... args);

template<class... Args> iterator emplace_hint(const_iterator hint, Args&&... args);

iterator insert(const T& x);

iterator insert(T&& x);

iterator insert(const_iterator hint, const T& x);

iterator insert(const_iterator hint, T&& x);

void insert(initializer_list<T> il);

template<container-compatible-range <T> R>

void insert_range(R&& rg);

template<class InputIterator>

void insert(InputIterator first, InputIterator last);

void insert(size_type n, const T& x);

iterator erase(const_iterator position);

iterator erase(const_iterator first, const_iterator last);

void swap(hive&) noexcept(allocator_traits<Allocator>::propagate_on_container_swap::value || allocator_traits<Allocator>::is_always_equal::value);

void clear() noexcept;

// [hive.operations] hive operations

void splice(hive& x);

void splice(hive&& x);

template<class BinaryPredicate = equal_to<T>>

size_type unique(BinaryPredicate binary_pred = BinaryPredicate());

template<class Compare = less<T>>

void sort(Compare comp = Compare());

iterator get_iterator(const_pointer p) noexcept;

const_iterator get_iterator(const_pointer p) const noexcept;

private:

hive_limits current-limits = implementation-defined; // exposition only

};

template<class InputIterator, class Allocator = allocator<iter-value-type <InputIterator>>>

hive(InputIterator, InputIterator, Allocator = Allocator())

-> hive<iter-value-type <InputIterator>, Allocator>;

template<class InputIterator, class Allocator = allocator<iter-value-type <InputIterator>>>

hive(InputIterator, InputIterator, hive_limits block_limits, Allocator = Allocator())

-> hive<iter-value-type <InputIterator>, Allocator>;

template<ranges::input_range R, class Allocator = allocator<ranges::range_value_t<R>>>

hive(from_range_t, R&&, Allocator = Allocator())

-> hive<ranges::range_value_t<R>, Allocator>;

template<ranges::input_range R, class Allocator = allocator<ranges::range_value_t<R>>>

hive(from_range_t, R&&, hive_limits block_limits, Allocator = Allocator())

-> hive<ranges::range_value_t<R>, Allocator>;

}

constexpr explicit hive(const Allocator&) noexcept;

Effects: Constructs an empty hive, using the specified allocator.

constexpr hive(hive_limits block_limits, const Allocator&);

Effects: Constructs an empty hive, using the specified allocator. Initializes current-limits with block_limits.

explicit hive(size_type n, const Allocator& = Allocator());

hive(size_type n, hive_limits block_limits, const Allocator& = Allocator());

Preconditions: T is Cpp17DefaultInsertable into hive.

Effects: Constructs a hive with n default-inserted elements, using the specified allocator. If the second overload is called, also initializes current-limits with block_limits.

Complexity: Linear in n.

hive(size_type n, const T& value, const Allocator& = Allocator());

hive(size_type n, const T& value, hive_limits block_limits, const Allocator& = Allocator());

Preconditions: T is Cpp17CopyInsertable into hive.

Effects: Constructs a hive with n copies of value, using the specified allocator. If the second overload is called, also initializes current-limits with block_limits.

Complexity: Linear in n.

template<class InputIterator>

hive(InputIterator first, InputIterator last, const Allocator& = Allocator());

template<class InputIterator>

hive(InputIterator first, InputIterator last, hive_limits block_limits, const Allocator& = Allocator());

Effects: Constructs a hive equal to the range [first, last), using the specified allocator. If the second overload is called, also initializes current-limits with block_limits.

Complexity: Linear in distance(first, last).

template<container-compatible-range<T> R>

hive(from_range_t, R&& rg, const Allocator& = Allocator());

template<container-compatible-range<T> R>

hive(from_range_t, R&& rg, hive_limits block_limits, const Allocator& = Allocator());

Effects: Constructs a hive object with the elements of the range rg, using the specified allocator. If the second overload is called, also initializes current-limits with block_limits.

Complexity: Linear in ranges::distance(rg).

hive(const hive& x);

hive(const hive& x, const type_identity_t<Allocator>& alloc);

Preconditions: T is Cpp17CopyInsertable into hive.

Effects: Constructs a hive object with the elements of x. If the second overload is called, uses alloc. Initializes current-limits with x.current-limits.

Complexity: Linear in x.size().

hive(hive&& x);

hive(hive&& x, const type_identity_t<Allocator>& alloc);

Preconditions: For the second overload, when allocator_traits<alloc>::is_always_equal::value is false, T meets the Cpp17MoveInsertable requirements.

Effects: If alloc == x.get_allocator() is true, current-limits is set to x.current-limits and each element block is moved from x into *this. Pointers and references to the elements of x now refer to those same elements but as members of *this. Iterators referring to the elements of x will continue to refer to their elements, but they now behave as iterators into *this.

If alloc == x.get_allocator() is false, each element in x is moved into *this. References, pointers and iterators referring to the elements of x, as well as the past-the-end iterator of x, are invalidated.

Postconditions: x.empty() is true.

Complexity: If alloc == x.get_allocator() is false, linear in x.size(). Otherwise constant.

hive(initializer_list<T> il, const Allocator& = Allocator());

hive(initializer_list<T> il, hive_limits block_limits, const Allocator& = Allocator());

Preconditions: T is Cpp17CopyInsertable into hive.

Effects: Constructs a hive object with the elements of il, using the specified allocator. If the second overload is called, also initializes current-limits with block_limits.

Complexity: Linear in il.size().

hive& operator=(const hive& x);

Preconditions: T is Cpp17CopyInsertable into hive and Cpp17CopyAssignable.

Effects: All elements in *this are either copy-assigned to, or destroyed. All elements in x are copied into *this.

[Note 1: current-limits is unchanged. - end note]

Complexity: Linear in size() + x.size().

hive& operator=(hive&& x) noexcept(allocator_traits<Allocator>::propagate_on_container_move_assignment::value || allocator_traits<Allocator>::is_always_equal::value);

Preconditions: When (allocator_traits<Allocator>::propagate_on_container_move_assignment::value || allocator_traits<Allocator>::is_always_equal::value) is false, T is Cpp17MoveInsertable into hive and Cpp17MoveAssignable.

Effects: Each element in *this is either move-assigned to, or destroyed. When (allocator_traits<Allocator>::propagate_on_container_move_assignment::value || get_allocator() == x.get_allocator()) is true, current-limits is set to x.current-limits and each element block is moved from x into *this. Pointers and references to the elements of x now refer to those same elements but as members of *this. Iterators referring to the elements of x will continue to refer to their elements, but they now behave as iterators into *this, not into x.

When (allocator_traits<Allocator>::propagate_on_container_move_assignment::value || get_allocator() == x.get_allocator()) is false, each element in x is moved into *this. References, pointers and iterators referring to the elements of x, as well as the past-the-end iterator of x, are invalidated.

Postconditions: x.empty() is true.

Complexity: Linear in size(). If (allocator_traits<Allocator>::propagate_on_container_move_assignment::value || get_allocator() == x.get_allocator()) is false, also linear in x.size().

size_type capacity() const noexcept;

Returns: The total number of elements that *this can hold without requiring allocation of more element blocks.

void reserve(size_type n);

Effects: If n <= capacity() is true there are no effects. Otherwise increases capacity() by allocating reserved blocks.

Postconditions: capacity() >= n is true.

Throws: length_error if n > max_size(), as well as any exceptions thrown by the allocator.

Remarks: All references, pointers, and iterators referring to elements in *this, as well as the past-the-end iterator, remain valid.

void shrink_to_fit();

T is Cpp17MoveInsertable into

hive.shrink_to_fit is a non-binding request to reduce

capacity() to be closer to size().capacity(), but may reduce

capacity(). It may reallocate elements. If

capacity() is already equal to size() there are

no effects. If an exception is thrown during allocation of a new element block,

capacity() may be reduced and reallocation may occur. Otherwise if an exception is thrown the effects are unspecified.*this may change and all references, pointers, and iterators referring to the elements in *this, as well as the past-the-end iterator, are invalidated.void trim_capacity() noexcept;

void trim_capacity(size_type n) noexcept;

capacity() is reduced accordingly. For the second overload,

capacity() is reduced to no less than n.

*this, as well as the past-the-end iterator,

remain valid.constexpr hive_limits block_capacity_limits() const noexcept;

current-limits.static constexpr hive_limits block_capacity_default_limits() noexcept;

hive_limits struct with the min and

max members set to the implementation's default limits.static constexpr hive_limits block_capacity_hard_limits() noexcept;

hive_limits struct with the min and

max members set to the implementation's hard limits.void reshape(hive_limits block_limits);

T is Cpp17MoveInsertable into hive.

block_limits, the elements within those active blocks are reallocated to new or existing element blocks which are within the bounds. Any element blocks not within the bounds of block_limits are deallocated.

If an exception is thrown during allocation of a new element block,

capacity() may be reduced, reallocation may occur and current-limits may be assigned a value other than

block_limits. Otherwise block_limits is assigned to current-limits. If any other exception is thrown the effects are unspecified.size() is unchanged.*this. If reallocation happens, also linear in the number of elements reallocated.capacity(). If

reallocation happens, the order of the elements in *this may

change. Reallocation invalidates all references, pointers, and

iterators referring to the elements in *this, as well as the

past-the-end iterator.

template<class... Args> iterator emplace(Args&&... args);

template<class... Args> iterator emplace_hint(const_iterator hint, Args&&... args);T is Cpp17EmplaceConstructible into hive from args.T constructed with std::forward<Args>(args)....hint parameter is ignored. If an exception is thrown, there are no effects.args can directly or indirectly refer to a value in *this. - end note]T is constructed.

iterator insert(const T& x);

iterator insert(const_iterator hint, const T& x);

iterator insert(T&& x);

iterator insert(const_iterator hint, T&& x);

return emplace(std::forward<decltype(x)>(x));hint parameter is ignored. - end note]

void insert(initializer_list<T> rg);

template<container-compatible-range <T> R>

void insert_range(R&& rg);T is Cpp17EmplaceInsertable into hive from *ranges::begin(rg). rg and *this do not overlap.rg. Each iterator in the range rg is dereferenced exactly once.T is constructed for each element inserted.

void insert(size_type n, const T& x);

T is Cpp17CopyInsertable into hive.n copies of x.n. Exactly one object of type T is constructed for each element inserted.

template<class InputIterator>

void insert(InputIterator first, InputIterator last);insert_range(ranges::subrange(first, last)).iterator erase(const_iterator

position);

iterator erase(const_iterator first, const_iterator last);

*this also

invalidates the past-the-end iterator.void swap(hive& x)

noexcept(allocator_traits<Allocator>::propagate_on_container_swap::value

|| allocator_traits<Allocator>::is_always_equal::value);

capacity() and current-limits of

*this with that of x.i + n and i

- n, where i is an iterator into the hive

and n is an integer, are the same as those of next(i,

n) and prev(i, n), respectively. For sort,

the definitions and requirements in [alg.sorting] apply.void splice(hive& x);

void splice(hive&& x);

get_allocator() == x.get_allocator() is true.addressof(x) == this is true the behavior is erroneous and there are no effects.

Otherwise, inserts the contents of x into *this

and x becomes empty. Pointers and references to the moved

elements of x now refer to those same elements but as members

of *this. Iterators referring to the moved elements

continue to refer to their elements, but they now behave as iterators into

*this, not into x.x

plus all element blocks in *this.length_error if any of x's active

blocks are not within the bounds of

current-limits.x are not transferred into

*this. If addressof(x) == this is false, invalidates the past-the-end iterator for both

x and *this.

template<class BinaryPredicate = equal_to<T>>

size_type unique(BinaryPredicate binary_pred = BinaryPredicate());binary_pred is an equivalence relation.hive, erases all

elements referred to by the iterator i in the range

[begin() + 1, end()) for which binary_pred(*i, *(i - 1)) is true.empty() is false, exactly size() -

1 applications of the corresponding predicate, otherwise no

applications of the predicate.*this is erased, also invalidates the past-the-end iterator.

template<class Compare = less<T>>

void sort(Compare comp = Compare());T is Cpp17MoveInsertable into hive, Cpp17MoveAssignable, and Cpp17Swappable.*this according to the comp function object. If an exception is

thrown, the order of the elements in *this is unspecified.size().*this, as well as the past-the-end iterator, may be invalidated.iterator get_iterator(const_pointer p) noexcept;

const_iterator get_iterator(const_pointer p) const noexcept;

p points to an element in

*this.*this.iterator or const_iterator pointing

to the same element as p.

template<class T, class Allocator, class U>

typename hive<T, Allocator>::size_type

erase(hive<T, Allocator>& c, const U& value);

Effects: Equivalent to: return erase_if(c, [&](auto& elem) { return elem == value; });

template<class T, class Allocator, class Predicate>

typename hive<T, Allocator>::size_type

erase_if(hive<T, Allocator>& c, Predicate pred);

auto original_size = c.size();

for (auto i = c.begin(), last = c.end(); i != last; ) {

if (pred(*i)) {

i = c.erase(i);

} else {

++i;

}

}

return original_size - c.size();

Affected subclause: [headers]

Change: New headers.

Rationale: New functionality.

Effect on original feature: The following C++ headers are new: <debugging>, <hazard_pointer>, <hive>, <inplace_vector>, <linalg>, <rcu>, and <text_encoding>.

Valid C++ 2023 code that #includes headers with these names may be invalid in this revision of C++.

Matt would like to thank: Glen Fernandes and Ion Gaztanaga for restructuring

advice, Robert Ramey for documentation advice, various Boost and SG14/LEWG/LWG

members for support, critiques and corrections, Baptiste Wicht for teaching me

how to construct decent benchmarks, Jonathan Wakely, Sean Middleditch, Jens

Maurer (very nearly a co-author at this point really), Tim Song, Patrice Roy

and Guy Davidson for standards-compliance advice and critiques, support,

representation at meetings and bug reports, Henry Miller for getting me to

clarify why the free list approach to memory location reuse is the most

appropriate, Ville Voutilainen and Gašper Ažman for help with the

colony/hive rename paper, Ben Craig for his critique of the tech spec, that

ex-Lionhead guy for annoying me enough to force me to implement the original

skipfield pattern, Jon Blow for some initial advice and Mike Acton for some

influence, the community at large for giving me feedback and bug reports on the

reference implementation.

Also Nico Josuttis for doing such a great job in terms of explaining the

general format of the structure to the committee.

Dedicated to Melodie.

Using plf::hive reference implementation.

#include <iostream>

#include <numeric>

#include "plf_hive.h"

int main(int argc, char **argv)

{

plf::hive<int> i_hive;

// Insert 100 ints:

for (int i = 0; i != 100; ++i)

{

i_hive.insert(i);

}

// Erase half of them (erase() returns an iterator to the next element, which ++it then skips over):

for (plf::hive<int>::iterator it = i_hive.begin(); it != i_hive.end(); ++it)

{

it = i_hive.erase(it);

}

std::cout << "Total: " << std::accumulate(i_hive.begin(), i_hive.end(), 0) << std::endl;

std::cin.get();

return 0;

}

#include <iostream>

#include "plf_hive.h"

int main(int argc, char **argv)

{

plf::hive<int> i_hive;

plf::hive<int>::iterator it;

plf::hive<int *> p_hive;

plf::hive<int *>::iterator p_it;

// Insert 100 ints to i_hive and pointers to those ints to p_hive:

for (int i = 0; i != 100; ++i)

{

it = i_hive.insert(i);

p_hive.insert(&(*it));

}

// Erase half of the ints:

for (it = i_hive.begin(); it != i_hive.end(); ++it)

{

it = i_hive.erase(it);

}

// Erase half of the int pointers:

for (p_it = p_hive.begin(); p_it != p_hive.end(); ++p_it)

{

p_it = p_hive.erase(p_it);

}

// Total the remaining ints via the pointer hive (pointers will still be valid even after insertions and erasures):

int total = 0;

for (p_it = p_hive.begin(); p_it != p_hive.end(); ++p_it)

{

total += *(*p_it);

}

std::cout << "Total: " << total << std::endl;

if (total == 2500)

{

std::cout << "Pointers still valid!" << std::endl;

}

std::cin.get();

return 0;

}

Benchmark results for plf::colony (performance and the majority of the code are identical to the std::hive reference implementation) under GCC 9.2 on an Intel Xeon E3-1241 (Haswell 2014) are here.

Old benchmark results for an earlier version of colony under MSVC 2015 update 3, on an Intel Xeon E3-1241 (Haswell 2014) are here. There is no commentary for the MSVC results.

Even older benchmark results for an even earlier version of colony under GCC

5.1 on an Intel E8500 (Core2 2008) are here.

This proposal and its reference implementation, and the original reference implementation, have several differences; one is that the original was named 'colony' (as in: a human, ant or bird colony), and that name has been retained for userbase purposes but also for differentiation. Other differences between hive and colony as of the time of writing follow:

Other differences may appear over time.

See the guide to container selection appendix for a more intensive answer to this question, however for a brief overview, it is worthwhile for performance reasons in situations where the order of container elements is not important and:

Under these circumstances a hive will generally out-perform other std:: containers. In addition, because it never invalidates pointer references to container elements (except when the element being pointed to has been previously erased) it may make many programming tasks involving inter-relating structures in an object-oriented or modular environment much faster, and should be considered in those situations.

Some ideal situations to use a hive: cellular/atomic simulation, persistent octrees/quadtrees, game entities or destructible objects in a video game, particle physics, and anywhere objects are being created and destroyed continuously. Also, anywhere a vector of pointers to dynamically-allocated objects or a std::list would typically end up being used in order to preserve pointer stability, but where order is unimportant.

A deque is reasonably dissimilar to a hive - being a double-ended queue, it requires a different internal framework. In addition, being a random-access container, having a growth factor for element blocks in a deque is problematic (though not impossible). deque and hive have no comparable performance characteristics except for insertion (assuming a good deque implementation). Deque erasure performance can vary substantially depending on implementation, but is generally similar to vector erasure performance. Unlike a hive, a deque invalidates pointers to subsequent container elements when erasing elements. Also unlike a hive, a deque guarantees ordered insertion.

Both a slot map and a hive attempt to create a container where there is reasonable insertion/erasure/iteration performance while maintaining stable links from external objects to elements within the container. In the case of hive this is done with pointers/iterators, in the case of a slot map this is done with keys, which are separate to iterators (which do not stay valid post-erasure/insertion in slot maps). Each approach has some advantages, but the hive approach has more, in my view.

If you use a slot map your external object also needs to store a link to the slot map in order to access an element from its stored key (or a higher-level object accessing the external object needs to store such a link). This prevents splicing, since there is no way to ensure that keys in one slot map are unique globally, as is possible with pointers. With a hive there is no need for the external object to have knowledge of the hive instance, as pointers are sufficient to access the elements and remain valid regardless of insertion/erasure.

One upside of the slot map approach is that if you make a duplicate of a slot map plus a duplicate of an external object which accesses its elements via keys, and send these to a secondary thread, little work need be done. All the keys stored in the external object duplicate will work with the copied slot map; all you need to do is update the external object to use the duplicate slot map instead of the original.

If you wish to do this with a hive, pointers to its elements in the duplicate external object will of course not point into the duplicate hive, so any external objects you copy to the secondary thread which you intend to access the hive elements, will need their pointers re-written. The easiest way to do this is by finding the indexes of the elements in the original hive instance via size_type original_index = std::distance(hive.begin(), hive.get_iterator(external_object.pointers[x]));, then using external_object_copy.pointers[x] = &*(std::next(hive_copy.begin(), original_index)); to write the new pointer values. Since std::distance/std::next/etc can be overloaded to be very quick for hive, this will not take too much time, but it is an inconvenience.
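The re-mapping described above can be sketched as follows. This is a minimal illustration only, under stated assumptions: std::list stands in for hive (both keep element pointers stable), and because std::list has no get_iterator(), the hypothetical helper index_of() finds the index by walking the container. The names external_object, index_of and remap_pointers are invented for this sketch and are not part of the proposal.

```cpp
#include <cstddef>
#include <iterator>
#include <list>
#include <vector>

// Hypothetical external object holding raw pointers to container elements.
struct external_object
{
    std::vector<int*> pointers;
};

// Stand-in for std::distance(h.begin(), h.get_iterator(p)): std::list has no
// get_iterator(), so this helper walks the container to find the index.
std::size_t index_of(std::list<int>& container, const int* element)
{
    std::size_t index = 0;
    for (auto it = container.begin(); it != container.end(); ++it, ++index)
    {
        if (&*it == element) return index;
    }
    return index; // equals container.size() if the element was not found
}

// Rewrite every pointer so that it refers to the element of 'container_copy'
// at the same index as the element it pointed to in 'original'.
// Assumes every pointer in 'source' points into 'original'.
void remap_pointers(std::list<int>& original, std::list<int>& container_copy,
                    const external_object& source, external_object& copy)
{
    copy.pointers.resize(source.pointers.size());
    for (std::size_t x = 0; x != source.pointers.size(); ++x)
    {
        const std::size_t original_index = index_of(original, source.pointers[x]);
        copy.pointers[x] =
            &*std::next(container_copy.begin(), static_cast<std::ptrdiff_t>(original_index));
    }
}
```

With a hive, index_of() would be replaced by the std::distance/get_iterator expression given in the text, which avoids the linear search.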

While I haven't done any benchmarks comparing hive performance to a slot map, I have done extensive benchmarks vs a packed array implementation, which is arguably simpler than a slot map but has the same characteristics, and hive is faster. From the structure of a slot map it is apparent that slot maps are slower for insertion, erasure and for referencing elements within the slot map via external objects. This is because of the intermediary interfaces of the key resolution array and generation counters, which need to be accessed and updated. Slot maps can however be faster for iteration, since all their data is stored contiguously (typically; this is implementation-defined) and iteration does not use the keys/counters. In addition, contiguous storage means using a slot map with SIMD is more straightforward.

Slot maps use more memory due to the keys/counters. Ignoring element block metadata, a hive implementation can use as little as 2 extra bits of metadata per element (current reference implementation is generally between 10 and 16 bits for performance reasons), but a slot map will typically use between 64 and 128 bits per element (or 32-64 on a 32-bit system). This will also lower performance due to higher pressure on the cache and the increased numbers of allocations/deallocations.

Unlike a std::vector, a hive can be read from and inserted-into/erased-from at the same time (provided the erased element is not the same as the element being read), however it cannot be iterated over and inserted-into/erased-from at the same time. If we look at the thread-safety matrix of a (non-concurrent implementation of) std::vector to see which basic operations can occur at the same time, it reads as follows (please note push_back() is the same as insertion in this regard due to reallocation when size == capacity):

| std::vector | Insertion | Erasure | Iteration | Read |
| --- | --- | --- | --- | --- |
| Insertion | No | No | No | No |
| Erasure | No | No | No | No |
| Iteration | No | No | Yes | Yes |
| Read | No | No | Yes | Yes |

In other words, multiple reads can happen simultaneously, but the potential reallocation and pointer/iterator invalidation caused by insertion/push_back and erasure means those operations cannot occur at the same time as anything else. pop_back() is slightly different in that it doesn't cause reallocation, so pop_back() calls and reads can occur at the same time provided you're not reading from back(). Likewise a swap-and-pop operation can occur at the same time as reading if neither the erased location nor back() is what's being read.

Hive on the other hand does not invalidate pointers/iterators to non-erased elements during insertion and erasure, resulting in the following matrix:

| hive | Insertion | Erasure | Iteration | Read |
| --- | --- | --- | --- | --- |
| Insertion | No | No | No | Yes |
| Erasure | No | No | No | Mostly* |
| Iteration | No | No | Yes | Yes |
| Read | Yes | Mostly* | Yes | Yes |

* Erasures will not invalidate iterators unless the iterator points to the erased element.

In other words, reads may occur at the same time as insertions and erasures (provided that the element being erased is not the element being read), multiple reads and iterations may occur at the same time, but iterations may not occur at the same time as an erasure or insertion, as either of these may change the state of the skipfield which is being iterated over, if a skipfield is used in the implementation. Note that iterators pointing to end() may be invalidated by insertion.

So, hive could be considered more inherently thread-safe than a (non-concurrent implementation of) std::vector, but still has many areas which require mutexes or atomics to navigate in a multithreaded environment.

Though I am happy to be proven wrong, I suspect hives/colonies/bucket arrays are their own abstract data type. Some have suggested its ADT is of type bag. I would somewhat dispute this, as it does not have typical bag functionality such as searching based on value (you can use std::find but it's O(n)), and adding this functionality would slow down other performance characteristics. Multisets/bags are also not sortable (by means other than automatically by key value). Hive does not utilize key values, is sortable, and does not provide the sort of functionality frequently associated with a bag (e.g. counting the number of times a specific value occurs).

Two reasons:

++ and -- iterator operations become O(n) in the number of active blocks, making them non-compliant with the C++ standard. At the moment they are O(1); in the reference implementation this constitutes typically one update for both skipfield and element pointers, two if a skipfield jump takes the iterator beyond the bounds of the current block and into the next block. But if empty blocks are allowed, there could be any number of empty blocks between the current element and the next one with elements in it. Essentially you get the same scenario as you do when iterating over a boolean skipfield.

Future implementations may find better strategies, and it is best not to overly constrain implementations. For the reasons described in the Design Decisions->Specific Functions section on erase(), retaining the current two back blocks has performance and latency benefits. Therefore reserving no active blocks is non-optimal. Meanwhile, reserving all active blocks is bad for performance, as many small active blocks will be reserved, which decreases iterative performance due to lower cache locality. If a user wants more fine-grained control over memory retention they may use an allocator.

The user must obtain the block capacity hard limits of the implementation (via block_capacity_hard_limits()) prior to supplying their own limits as part of a constructor or reshape(), so that they do not trigger undefined behavior by supplying limits which are outside of the hard limits. Hence it was perceived by LEWG that there would be no reason for a hive_limits struct to ever be used with non-user-supplied values, e.g. zero.

There are 'hard' capacity limits, 'default' capacity limits, 'current' limits and user-defined capacity limits. Current limits are whatever the current min/max capacity limits are in a given instance of hive. Default limits are what a hive is instantiated with if user-defined capacity limits are not supplied. Both default limits and user-defined limits are not allowed to go outside of an implementation's hard limits, which represent a fixed upper and lower limit. New element blocks have an implementation-defined growth factor, so will expand up to the current max limit.
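The relationship between user-defined limits and hard limits can be sketched as a simple range check. This is an illustration only: the limits struct below is a stand-in for a min/max pair of block capacities, and within_hard_limits() and clamp_to_hard_limits() are hypothetical helpers, not part of the proposal. The sketch assumes the hard limits themselves satisfy min <= max.

```cpp
#include <algorithm>
#include <cstddef>

// Stand-in for a min/max pair of block capacities (hypothetical type).
struct limits
{
    std::size_t min;
    std::size_t max;
};

// True if 'requested' is internally consistent and lies inside 'hard'.
constexpr bool within_hard_limits(limits requested, limits hard)
{
    return requested.min <= requested.max
        && requested.min >= hard.min
        && requested.max <= hard.max;
}

// Pull 'requested' into the 'hard' range, keeping min <= max.
constexpr limits clamp_to_hard_limits(limits requested, limits hard)
{
    const std::size_t lo = std::max(hard.min, std::min(requested.min, hard.max));
    const std::size_t hi = std::max(lo, std::min(requested.max, hard.max));
    return limits{lo, hi};
}
```

In real use the hard limits would be obtained from block_capacity_hard_limits() before the user-defined limits are passed to a constructor or reshape(); a check like the one above is one way to avoid supplying out-of-range values.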

While implementations are free to choose their own limits and strategies, in the reference implementation element block sizes start from either the dynamically-defined default minimum size (8 elements, larger if the type stored is small) or an amount defined by the user (with a minimum of 3 elements, as less than 3 elements is pretty much a linked list with more waste per-node).

Subsequent block capacities in the reference implementation increase the total capacity of the hive by a factor of 2 (so, 1st block 8 elements, 2nd 8 elements, 3rd 16 elements, 4th 32 elements and so on) until the current maximum block size is reached. The default maximum block size in the reference implementation is 255 (if the type sizeof is < 10 bytes) or 8192. These values are based on multiple benchmark comparisons between different maximum block capacities, with different sized types. For larger-than-10-byte types the skipfield bitdepth is (at least) 16, so the maximum capacity 'hard' limit would be 65535 elements in that context; for < 10-byte types the skipfield bitdepth is (at least) 8, making the maximum capacity hard limit 255. Larger capacities do not necessarily perform better because, given a randomized erasure pattern, a larger block may statistically retain more erased elements (i.e. empty space) before it runs out of elements entirely and is removed from the sequence, and can therefore create slowdown during iteration due to low locality.
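The growth pattern described above can be worked through with a short sketch. This is not the reference implementation's code; block_capacities() is a hypothetical helper which assumes that the first block has the minimum capacity and that each later block's capacity equals the running total capacity (so the total doubles), capped at the maximum block capacity.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Returns the capacities of the first 'block_count' blocks, assuming
// min_capacity <= max_capacity. Each block after the first is as large as
// the total capacity so far, unless that exceeds max_capacity.
std::vector<std::size_t> block_capacities(std::size_t min_capacity,
                                          std::size_t max_capacity,
                                          std::size_t block_count)
{
    std::vector<std::size_t> capacities;
    std::size_t total = 0;
    for (std::size_t i = 0; i != block_count; ++i)
    {
        const std::size_t next = (i == 0) ? min_capacity
                                          : std::min(total, max_capacity);
        capacities.push_back(next);
        total += next;
    }
    return capacities;
}
```

Calling block_capacities(8, 8192, 5) yields 8, 8, 16, 32, 64, matching the sequence given in the text. With a maximum of 255, the seventh and later blocks are capped at 255.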

See the summary in paper P2857R0 which goes into this.

Yes if you're careful, no if you're not.

On platforms which support scatter and gather operations via hardware (e.g. AVX512) you can use hive with SIMD as much as you want, using gather to load elements from disparate or sequential locations, directly into a SIMD register, in parallel. Then use scatter to push the post-SIMD-process values back to their original locations.